LGBTQ+ Pride Month: Turing Honored on British Bill

Wednesday, June 23rd, 2021

June is LGBTQ+ Pride Month. All month long, Behind the Headlines will feature lesbian, gay, bisexual, transgender, and queer or questioning pioneers in a variety of areas.

On what would have been his 109th birthday, the English mathematician, computer pioneer, and codebreaker Alan Turing is getting a very special gift: a 50-pound (£50) note. It’s not just any old £50 bank note, however. This bank note, along with millions of others, will have his face on it.

Following a public nomination process in 2019, Turing was selected to be the new face of the £50 note. His image will replace images of the engineer and scientist James Watt and the industrialist and entrepreneur Matthew Boulton. An image of Elizabeth II will remain on the obverse side of the note, or the side that bears the principal design.

Turing was recognized not only for his important contributions to the development of electronic digital computers, but also for the discrimination he faced as a gay man. After World War II (1939-1945), Turing was prosecuted for his relationship with a man. He was given the choice of either imprisonment or probation with the condition of undergoing female hormone treatment. On June 7, 1954, at the age of 41, Turing took his own life.

In 2009, the British government issued an apology. Four years later, Turing was given a royal pardon, releasing him from the legal penalties for his crime. In 2017, the Turing Law was passed, which pardoned thousands of gay and bisexual men who had been convicted of sexual offenses that have since been eliminated.

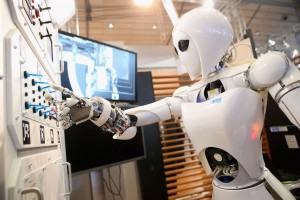

Alan Turing (far right) was an English mathematician and computer pioneer. He made important contributions to the development of electronic digital computers.

Credit: Heritage-Images/Science Museum, London

Turing was born on June 23, 1912, in London. He studied mathematics at Cambridge University and Princeton University. In 1936, he developed a hypothetical computing machine—now called the Turing machine—that could, in principle, perform any calculation. The device had a long tape divided into squares on which symbols could be written or read. The tape head of the machine could move to the left or to the right. The machine also had a table to tell it the order in which to carry out operations. The Turing machine became an important model for determining what tasks a computer could perform. During World War II, Turing helped crack German codes.
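The tape, head, and table described above can be sketched in a few lines of Python. This is only an illustrative sketch, not Turing's own notation: the function name `run_turing_machine`, the state names, the blank symbol `_`, and the example table (which adds one to a binary number) are all assumptions made for this example.

```python
from collections import defaultdict

BLANK = "_"  # assumed symbol for an empty square on the tape


def run_turing_machine(table, tape, state="scan", halt_state="halt", max_steps=10_000):
    """Run a one-tape machine and return the final tape contents as a string.

    `table` maps (state, symbol) to (symbol_to_write, move, next_state),
    where move is "L" (left) or "R" (right).
    """
    # The tape is unbounded in both directions; unwritten squares read as BLANK.
    cells = defaultdict(lambda: BLANK, enumerate(tape))
    head = 0
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("step limit reached before the machine halted")
        write, move, state = table[(state, cells[head])]
        cells[head] = write               # write a symbol on the current square
        head += 1 if move == "R" else -1  # move the head one square
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells[i] for i in range(lo, hi + 1)).strip(BLANK)


# Hypothetical example table: add 1 to a binary number written on the tape.
INCREMENT = {
    ("scan", "0"): ("0", "R", "scan"),     # walk right to the end of the number
    ("scan", "1"): ("1", "R", "scan"),
    ("scan", BLANK): (BLANK, "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),   # 1 + 1 = 0, carry continues left
    ("carry", "0"): ("1", "L", "halt"),    # absorb the carry and stop
    ("carry", BLANK): ("1", "L", "halt"),  # number grew by one digit
}

print(run_turing_machine(INCREMENT, "1011"))  # prints 1100
```

Changing only the table, and not the function that runs it, changes what the machine computes. That separation is one reason the Turing machine works well as a model of a general-purpose computer.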

After the war, he worked on a project to build the first British electronic digital computer. In 1950, he proposed a test for determining if machines might be said to “think.” This test, now called the Turing test, is often mentioned in discussions of artificial intelligence (AI).